How to leverage indexes for `ILIKE` & `LIKE` queries

You cannot always avoid LIKE or ILIKE queries when it comes to solving

specific business requirements. However, it does not mean that those queries

have to be slow. Lets look at how the keywords affect query usage, and what

can be done to optimise the query plans and the impact each option has on

the overall performance of the database.

LIKE, ILIKE and why you might need to use them

LIKE and ILIKE operators allow you to use wildcards in your queries to

provide a level of fuzzy-matching should you require it. The wildcard in SQL

syntax is represented by the percent symbol: %.

A very common use-case is to find all names or emails matching a prefix,

suffix or both. Generally this kind of query can be optimised such that we

avoid the LIKE or ILIKE syntax which can have pretty impactful performance

considerations.

The difference between LIKE and ILIKE is that the ILIKE operator will

ignore the case of the strings which it compares, ie: it will match AbC with

a record containing abc or aBc - this is particularly useful anytime you

are dealing with user input - as case consistency is not a given.

Alternatives to LIKE and ILIKE

Firstly, if you need to check for only a prefix - you can actually trim both strings to the desired length and perform an equality check, which can use an existing index and yield really good performance. The same can be applied if you also need to ignore casing, you can create an index which transforms the column - resulting in slightly more work at INSERT time but better performance for reads.

CREATE INDEX CONCURRENTLY IF NOT EXISTS idx_lower_name ON

"users" (lower(name));

Allowing us to write a query like this:

SELECT *

FROM users

WHERE lower(name) = ?

The above query will be able to use the index defined above, and as long as we ensure that we pass a string which has been lower-cased from the calling point, we are ensuring great performance by using the index.

Another alternative is using the citext extension - which stands for case

insensitive text, which can be used as a drop-in replacement for text columns.

However, if you are expecing to have letters that have accents, those will not

be normalized to the non-accented letter. There is also a small storage overhead

to be paid when using citext - but it allows you to avoid the

LOWER(...) calls on your queries.

Optimising LIKE and ILIKE queries

LIKE queries which check only for prefixes, eg: LIKE 'alex%' - can make use

of existing BTree indexes to speed up their performance and do an IndexScan

which can plug some gaps. ILIKE cannot use BTree indexes and as such cannot

be optimised from.

How the query plan will look like for hitting an IndexScan for a LIKE query:

EXPLAIN ANALYZE

SELECT *

FROM emails

WHERE LOWER(recipient_email) LIKE 'alex%'

LIMIT 5;

QUERY PLAN

-------------------------------------------------------------------------------------------------------------------------------------------------------------------

Limit (cost=0.43..129.79 rows=5 width=1370) (actual time=61.852..1837.272 rows=5 loops=1)

-> Index Scan using idx_lower_rec_email on emails (cost=0.43..1472852.26 rows=56930 width=1370) (actual time=61.851..1837.266 rows=5 loops=1)

Filter: (lower((recipient_email)::text) ~~ 'alex%'::text)

Rows Removed by Filter: 2079

Planning Time: 0.165 ms

Execution Time: 1842.558 ms

(6 rows)

However, sometimes you cannot get away with just a single non-leading wildcard, and you require more power.

Trigrams & pg_trgm for multiple wildcards

Enter trigrams - via the pg_trgrm extension, which splits up the words into

3 letter segments, allowing much more granularity when it comes to querying.

You will also need to use a GIN or GiST index to be able to leverage the

trigrams.

[Trigrams for "Charlie"]

+-------+

| cha |

| har |

| arl |

| rli |

| lie |

+-------+

[Trigram GIN Index]

+---------+------------+

| Trigram | Row IDs |

+---------+------------+

| cha | {3} |

| har | {3} |

| arl | {3} |

| rli | {3} |

| lie | {3} |

+----------------------+

This will allow queries like SELECT * FROM users WHERE name LIKE "%arl%" to

have very very fast results.

However, it is obviously going to come at a pretty large cost in terms of storage,

as now instead of storing a single reference to the name, you have split it up

in threes:

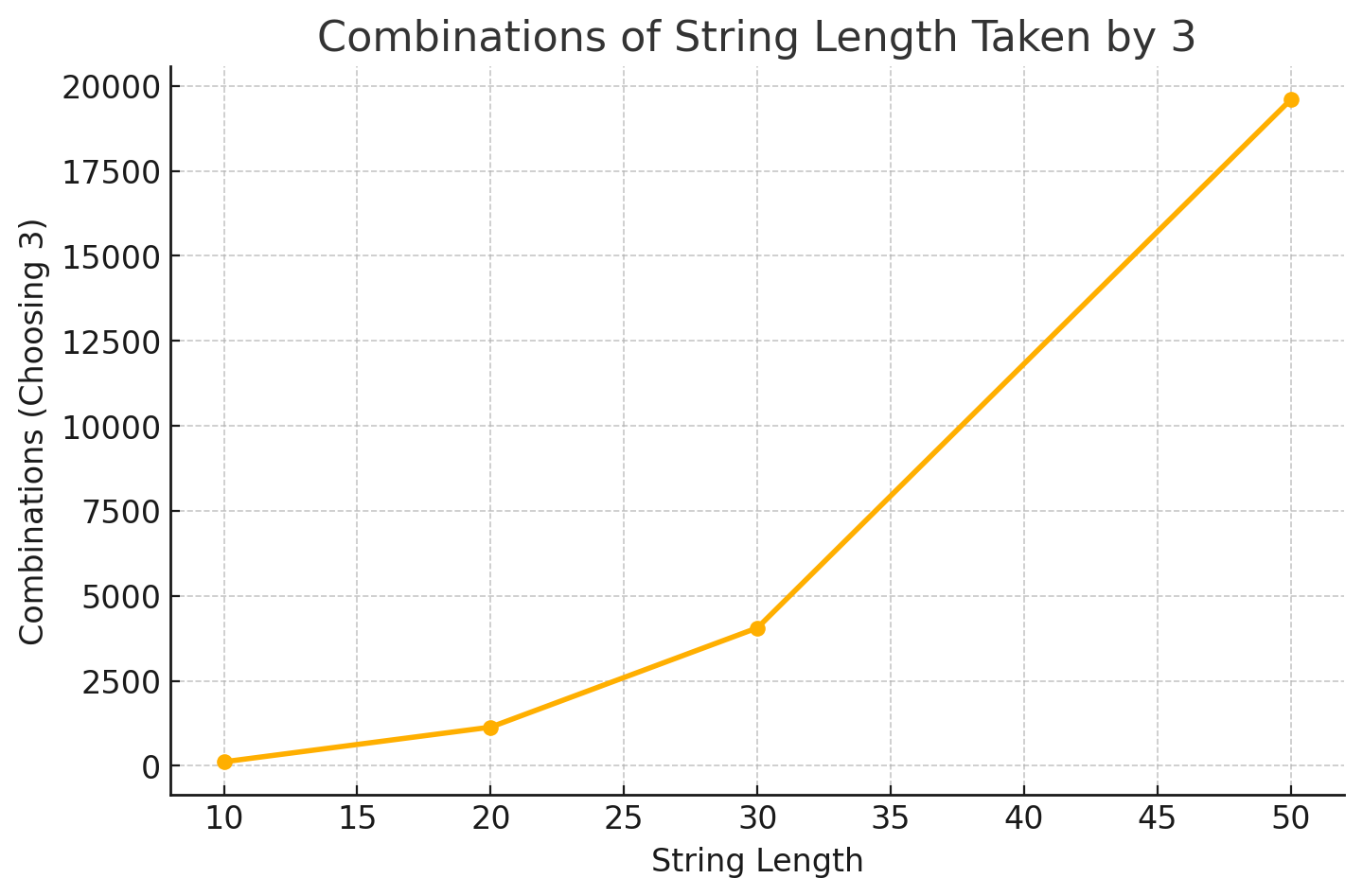

This is a classic exponential situation which:

Storage considerations aside, you have to consider that the trigrams have to be computed at INSERT time and saved in the index. This will increase the latency of the data ingestion, however trading that off for the faster data lookups.

Recommendations

- Try to use

LOWER(...) =functions if possible to do prefix matching, rather than aLIKE "alex%". Create an index that computes theLOWER(...)and can be used to speed up these queries. - Use

citextif you require case-insensitive, prefix-lookups and want to avoid theLOWER(...)changes. - Use

pg_trgmfor short strings which require multiple wildcards. Be mindful of the insert time overhead.